DamianHarty.com

How Many Megapixels are Enough?

Are all image files the same?

- It depends how big you want to print - 2½ Megapixels is enough for normal size (6" x 4") prints

- It's not the Megapixels, it's the noise.

- Not all image files are the same

- Shooting RAW files gives good control later

Resolution

When digital cameras were first introduced, there was a lot of confusion about the resolution required to prevent digital images becoming obviously "blocky" upon printing. Camera makers were keen to promote a simple story like "more megapixels are better" because it was an easy sell to a numerate but not very tech-savvy public.

The reality isn't that difficult, although it requires a little attentiveness to get through some of the twists and turns.

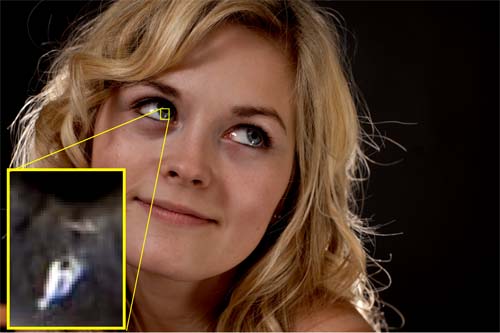

This is a great, sharp shot showing Emma's clear skin and moist eyes. Successful use of a hair light and an enigmatic expression make me really like this shot. It's easy to imagine it as a poster for something, with space for copy on the right of the image. "Wet-look Eye Drops", perhaps.

For it to be an advertising poster the question is "how big can it go?" This question also applies to an image that has been selectively cropped to improve the framing, and thus may not be as detailed as a "full frame" image.

This picture was taken with a Nikon D200 in my studio, and processed in-camera to a high quality JPEG file[1]. It displays the very low noise levels which have been typical of digital single lens reflex (DSLR) cameras for some time now. This "clean" quality became briefly very common in news photography before the ubiquitous "citizen reporter" with a smartphone took over. It is sometimes surprising to look back at historical photographs and realise how poor quality they were in comparison, apart from professional medium quality work that passes the test of time, like Alfred Palmer's World War 2 photos.

Back to Emma's picture. It measures 3872 picture elements ("pixels") by 2592 pixels. Each picture element is a square of uniform colour, as can be seen from the very small element of Emma's eye (the curve of the dark pupil can be seen across the top of the inset picture, and the light-coloured item is a patch of light reflected on Emma's eye). If just the inset panel were printed at 4.7 cm high and 3.4 cm wide, it would show what this portion would look like if the whole image were blown up to 328 cm x 220 cm (10 feet 9 inches x 7 feet 2 inches). It's clearly somewhat blocky, and yet we have all seen advertising images significantly bigger than this on posters, although admittedly we haven't necessarily got up close to them to see what the quality is like.

Print resolution is usually defined by "dots per inch" (dpi) - in this context a dot and a picture element are interchangeable, although to be strictly correct a picture element is a data entity and a dot is a display or print entity. The sample eye piece above is at the equivalent of 30 dpi and it clearly reveals the digital nature of the underlying image.

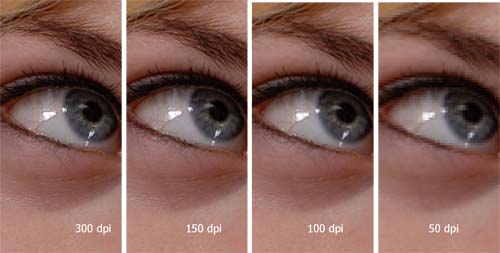

The original image is so finely digitised that it is a little difficult to comprehend the resolution effects, so a slightly larger piece of image has been taken and treated such that the resolution is reduced. This process is effectively making each pixel something like the average of its neighbours and is sometimes called "deresolving"; from a data processing perspective it is called "down-sampling". Note the detail in the eye is very much reduced when the image is deresolved.
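As a rough illustration of the down-sampling described above, the sketch below averages each block of neighbouring pixels into a single pixel. It is only one simple way of doing it, not necessarily what was used to make the sample images; the function name `downsample` and the small test patch are invented for the example.

```python
def downsample(pixels, factor):
    """Shrink a greyscale image by averaging each factor x factor block of pixels.

    `pixels` is a list of equal-length rows of brightness values (0-255).
    Rows and columns that do not fill a complete block are dropped.
    """
    out_rows = len(pixels) // factor
    out_cols = len(pixels[0]) // factor
    result = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Collect every pixel in the block that maps onto output pixel (r, c).
            block = [
                pixels[r * factor + dr][c * factor + dc]
                for dr in range(factor)
                for dc in range(factor)
            ]
            row.append(sum(block) / len(block))
        result.append(row)
    return result


# A hypothetical 4 x 4 patch containing a ragged dark/light edge.
patch = [
    [0, 0, 255, 255],
    [0, 255, 255, 255],
    [0, 0, 0, 255],
    [0, 0, 255, 255],
]
small = downsample(patch, 2)
print(small)
```

The ragged edge in the 4 x 4 patch becomes a handful of intermediate greys in the 2 x 2 result, which is the loss of fine detail seen in the eye.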

It is immediately clear that lowering the resolution of the image degrades the quality, and this is hardly a surprising conclusion. However, it is fair to say that the perception of the reduction in quality is nothing like linear; from 300 to 150 dpi most observers would be hard pressed to discern a real difference, even though 300 dpi is the accepted industry standard for print-quality resolution. Of course, this can be significantly influenced by the print process in use, so it would be unwise to dismiss the 300 dpi guideline as unnecessary; rather, it is one that can be confidently used as input to any print process. Nevertheless, when printed on a high quality output device such as a modern photo printer using 250 g/m² photographic paper, it is genuinely difficult to be disappointed by a 150 dpi output and even the 100 dpi output appears quite acceptable. Thus, straight from the camera it would seem that enlargements up to 65 cm x 44 cm (26" x 18") will give great quality and 98 cm x 66 cm (38" x 26") will remain acceptable for the 10 Megapixel D200.
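The enlargement sizes quoted above are simply the pixel dimensions divided by the print resolution. A minimal sketch of that arithmetic follows; the function name `print_size` is made up for the example and the results agree with the quoted figures to within rounding.

```python
CM_PER_INCH = 2.54


def print_size(width_px, height_px, dpi):
    """Return (width_in, height_in, width_cm, height_cm) for a print at `dpi`."""
    width_in = width_px / dpi
    height_in = height_px / dpi
    return width_in, height_in, width_in * CM_PER_INCH, height_in * CM_PER_INCH


# Pixel dimensions of the D200 image discussed in the text.
D200_WIDTH_PX, D200_HEIGHT_PX = 3872, 2592

for dpi in (300, 150, 100, 30):
    w_in, h_in, w_cm, h_cm = print_size(D200_WIDTH_PX, D200_HEIGHT_PX, dpi)
    print(f"{dpi:>3} dpi: {w_in:5.1f} in x {h_in:4.1f} in  ({w_cm:5.1f} cm x {h_cm:5.1f} cm)")
```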

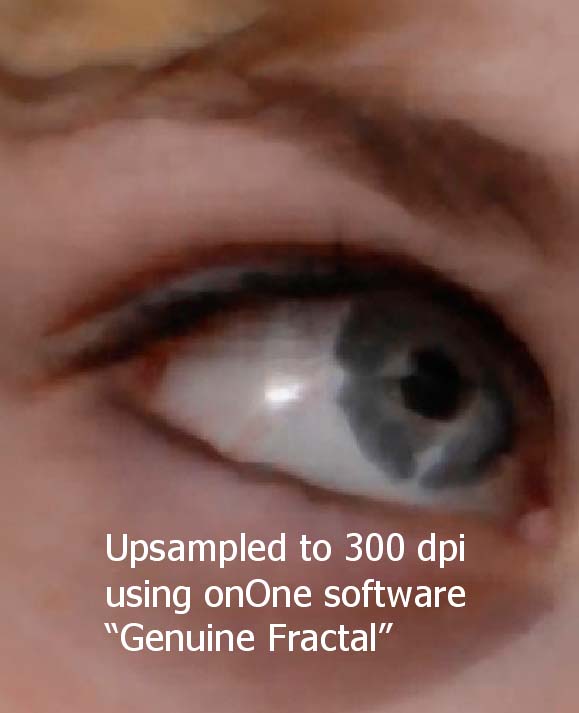

What if more is required? If we consider a 30 dpi version of Emma's eye, it is obviously blocky, above. If we want to print it at this physical size, adding more pixels is necessary to prevent the blocky appearance - but what colour should the new pixels be? The process of adding in data between existing points is called "up-sampling" in data processing and is sometimes referred to as upward-resolving or "uprezzing" - a fairly horrible term that won't find its way into my dictionary any time soon. Adobe Photoshop offers a process for increasing the resolution of an image by inventing new pixels according to a defined algorithm - in this case the so-called "bicubic" algorithm that fits two cubic equations to the pixels around the pixel of interest - one horizontal and one vertical - and calculates new pixels using the fitted equations.
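To give a flavour of how new pixels can be invented from a fitted cubic, here is a one-dimensional sketch using a Catmull-Rom cubic along a single row of pixels. This is an assumption made for illustration: it is one common cubic, not necessarily the exact one Photoshop uses, and a two-dimensional version would apply the same idea along rows and then along columns. The function names are hypothetical.

```python
def cubic_interpolate(p0, p1, p2, p3, t):
    """Catmull-Rom cubic through four evenly spaced samples.

    Returns the estimated value a fraction t (0..1) of the way from p1 to p2.
    """
    return 0.5 * (
        2 * p1
        + (-p0 + p2) * t
        + (2 * p0 - 5 * p1 + 4 * p2 - p3) * t ** 2
        + (-p0 + 3 * p1 - 3 * p2 + p3) * t ** 3
    )


def upsample_row(row, factor):
    """Insert factor - 1 new values between each pair of neighbouring pixels."""
    # Repeat the edge pixels so every segment has four surrounding samples.
    padded = [row[0]] + list(row) + [row[-1]]
    out = []
    for i in range(len(row) - 1):
        p0, p1, p2, p3 = padded[i:i + 4]
        for step in range(factor):
            value = cubic_interpolate(p0, p1, p2, p3, step / factor)
            # A cubic fit can overshoot, so clamp to the valid 8-bit range.
            out.append(min(255.0, max(0.0, value)))
    out.append(float(row[-1]))
    return out


row = [0, 0, 255, 255]  # a hard dark-to-light edge
print(upsample_row(row, 2))
```

The new pixel in the middle of the edge comes out as a mid-grey: plausible, but invented from the fitted curve rather than measured.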

Another possible technology is the use of fractal equations[2] in the same manner: an equation is fitted to the pixels around the one of interest and new pixels are calculated from the resulting equations - but this time the equations are for fractals, an elegant and compact form of equation that describes well some forms in nature.

Some extravagant claims are made for fractal technology but it can be clearly seen in this example that it is in no way equivalent to having the original data. Proponents claim greatly reduced photographic data requirements that can be up-sampled later to recreate an image of any size. To some extent those making these claims are either taking images with very little texture or else they are revealing their fundamental ignorance about the nature of digital data. A third explanation is that they are selling the software and adding a positive "spin". This level of confusion and misdirection is not unique to digital photography, and has been prevalent in digital audio for many years, where all sorts of nonsense has been invented to justify the choice of 44.1 kHz as a sample rate when in reality it was a compromise forced by the original design of the medium[3]; informed observers will note that modern sound studio equipment functions at 96 kHz and 24 bits because one cannot "put back" missing information - however often the producers of "CSI"[4] might imagine it is so. And thus it is with photography, too.

One thing that cannot be argued is that the visual quality of the fractal enhancement is significantly more pleasing to the eye than the bicubic method. This is something that cannot be justified analytically but plainly explains the widespread popularity of the fractal method for enlarging pictures beyond their "technical" maximum size for an uncritical audience. It definitely produces natural-looking pictures and disguises their digital nature.

So how many megapixels are enough?

Well, the first observation is that the "megapixel" rating is the total number of picture elements divided by one million[5] whereas the "dots per inch" rating is the number of dots in a straight line across or down the picture and the relationship between them is not as direct as it might appear. A one megapixel image at a typical 1.5:1 aspect ratio is 836 pixels by 1254 pixels. (Nobody even remembers 1 megapixel phone cameras these days, but they did exist). My Nikon D200 gave 2592 pixels by 3872 pixels - about three times the dots per inch but ten times the megapixels. Note therefore the one megapixel camera would give an image like the 100 dpi sample earlier, approximately - an image only slightly dulled compared to the ten megapixel D200 given identical optics and sensor technology.
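The relationship between megapixels and linear resolution can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function name is invented, and it takes the binary 1,048,576 definition of a megapixel, which is the one that reproduces the 836 x 1254 figure.

```python
import math


def dimensions_from_megapixels(megapixels, aspect_ratio=1.5,
                               pixels_per_megapixel=1_000_000):
    """Return (short_side_px, long_side_px) for a given megapixel count."""
    total_pixels = megapixels * pixels_per_megapixel
    short_side = math.sqrt(total_pixels / aspect_ratio)
    return round(short_side), round(short_side * aspect_ratio)


# One megapixel, using the binary definition (2**20 pixels).
short_px, long_px = dimensions_from_megapixels(1, pixels_per_megapixel=2 ** 20)
print(short_px, long_px)

# Compare with the D200 image: linear resolution versus pixel count.
linear_ratio = 2592 / short_px
pixel_ratio = (2592 * 3872) / (short_px * long_px)
print(f"linear: {linear_ratio:.1f}x, pixels: {pixel_ratio:.1f}x")
```

Because pixel count grows with the square of linear resolution, a tenfold increase in megapixels buys only about a threefold increase in dots per inch at a given print size.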

From a pure resolution standpoint, that wasn't what the DSLR manufacturers wanted you to hear. It led to some mistaken smugness in phone and compact camera users and certainly hurt the camera industry.

Cheaper cameras, in mobile phones and the like, rarely have high quality optics and give a high level of fuzziness with an absence of contrast, although recent advances by both Apple and Samsung make that significantly less true than it used to be. Software sharpening in them can be excessive, giving strange halos around objects.

More importantly, their sensors can be somewhat noise-prone, giving results like that shown below (I've increased the noise to make it really obvious to see - I am trying to illustrate the character of it) particularly in low light.
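The character of this kind of noise can be imitated numerically by adding a random offset to every pixel. The sketch below assumes simple Gaussian noise on a flat grey patch, which is a simplification made for illustration (real sensor noise is more complicated); the function name and the `sigma` values are hypothetical.

```python
import random


def add_sensor_noise(pixels, sigma, seed=0):
    """Add Gaussian noise of standard deviation `sigma` to a greyscale image.

    Values are rounded and clamped to the 0-255 range of an 8-bit channel.
    A fixed seed keeps the result repeatable.
    """
    rng = random.Random(seed)
    return [
        [min(255, max(0, round(value + rng.gauss(0, sigma)))) for value in row]
        for row in pixels
    ]


# A flat mid-grey patch, as a noiseless sensor would record an even tone.
flat = [[128] * 6 for _ in range(3)]

mild = add_sensor_noise(flat, sigma=2)     # barely visible speckle
heavy = add_sensor_noise(flat, sigma=30)   # obvious grain, as in low light
for row in heavy:
    print(row)
```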

With all this confusion, it isn't difficult to understand why a simplistic idea like "more megapixels are better" took hold despite the fact that there's more to it than just megapixels. It also explains to a large extent why compact camera production has plunged to about a quarter of what it was in 2011 - their sensors and optics don't offer much advantage over a modern phone camera, but they can't make phone calls or access the internet. Arguments about improved quality are simply too nuanced to be heard.

Based on the observations above, it would seem that the size recommendations for digital images depend a great deal on the expectations of quality. Photographic print sizes are often given in inches and have varied historically with the size of paper available, although modern digital printing services now mean many "intermediate" sizes are available. The following table is calculated based on a number of common types of camera at the time of writing.

| Camera | Publishing (300 dpi) | Excellent (150 dpi) | Fractal scaled (30 dpi) |
| --- | --- | --- | --- |
| 1 Megapixel[6] "old phone camera" | 2½" x 4" | 5" x 8" | 27" x 40" |
| 4 Megapixel[6] "cheap compact camera" | 5" x 8" | 10" x 15" | 55" x 80" |
| 8 Megapixel[6] "phone camera" | 7½" x 11" | 15" x 23" | 75" x 115" |
| 20 Megapixel[6] "digital SLR" | 12" x 18" | 24" x 36" | 120" x 180" |
| 22 Megapixel[6] "pro film scanner" | 12" x 18" | 25" x 38" | 127" x 190" |
| 100 Megapixel[6,7] "studio medium format" | 33" x 33" | 66" x 66" | 330" x 330" |

The very existence of this table also misses another big change of the last 20 years: nobody prints pictures any more. For use on a phone or tablet, a 1 Megapixel picture is actually plenty.

I currently use a 12 Megapixel digital SLR body and a 22 Megapixel film scanner used with a film SLR body and Fuji Velvia professional slide film. Click here to read why I choose Fuji Velvia and how it compares to digital.

For convenience I almost always use the digital body. The workflow of wet chemical development followed by digital scanning does give great quality, but digital development is very fast and not really any worse. The primary difference between the hobby SLR and the pro SLR is the ability to use a wider variety of lenses and accessories plus improved control of digital image processing, rather than a meaningful difference in resolution.

About the only real disadvantage of the digital body is that the same sensor is used all the time to capture the image. If lenses are changed frequently, dust ingress is inevitable and cumulative. There is quite a lot of paranoia on the various fora in which such things are discussed, but I have never subscribed to it. Check out my quick illustrated guide on sensor cleaning. (Dust does occur on film, but since the film is replaced for each photograph, it tends not to be cumulative. Dust can be cleaned off developed film with plenty of water and a gentle finger; the film then needs to be hung to dry completely before being scanned or put into an enlarger.)

For full-size advertising work, there is no substitute for medium or large format and the current darling of the industry is the Phase One XF100 digital system, giving astonishing resolution combined with digital convenience and speed.

File Types

All of the above has presumed that the image data is not corrupted in any way. If each image were stored in its entirety, the 10 megapixel image from the D200 would be 10,000,000 pixels x 3 channels (red, green, blue) x 1 byte, or 30 million bytes[8].
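The uncompressed size is easy to verify. The sketch below repeats the calculation with both the rounded 10 million pixels used above and the exact 3872 x 2592 dimensions; the function name is made up for the example.

```python
def uncompressed_bytes(width_px, height_px, channels=3, bytes_per_channel=1):
    """Size in bytes of an image stored with no compression at all."""
    return width_px * height_px * channels * bytes_per_channel


BYTES_PER_BINARY_MEGABYTE = 2 ** 20  # 1,048,576, as in footnote [8]

rounded = 10_000_000 * 3 * 1              # the rounded figure used in the text
exact = uncompressed_bytes(3872, 2592)    # the actual D200 pixel dimensions

print(f"rounded: {rounded:,} bytes = {rounded / BYTES_PER_BINARY_MEGABYTE:.1f} MB")
print(f"exact:   {exact:,} bytes = {exact / BYTES_PER_BINARY_MEGABYTE:.1f} MB")
```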

A long time ago, in a galaxy far, far away, the Joint Photographic Experts Group laid down a data compression protocol that remains in widespread use; it discards some image data to compress the image. The exact amount of compression achieved varies on an image-by-image basis but the key idea is that some information is irretrievably discarded. Many software tools read JPEG files and many cameras have settings that adjust how much data is discarded at the time of recording the image - these are invariably referred to as "quality" in some way. Adobe Photoshop has a number of different quality indices that can be selected to allow the user to prioritise file size or image quality, or to strike a balance between them. The base image has a quality index of just over 8 in Photoshop terms, where 1 is the smallest file and 12 is the best quality. In order to demonstrate the effect of discarding data, the same sample of image was saved with a quality setting of 1, the lossiest setting for the JPEG compression algorithm, and is shown below.

In the lower quality version (right), a different type of blocky appearance can be seen. These are not the same as pixel blocks that were seen earlier when the image was down-sampled but are known as "artefacts" of the compression algorithm. The visible block spans multiple picture elements and all semblance of information regarding skin texture and iris detail is lost.

Using lower quality JPEG images is common for web-based items where file-size is at a premium, but for high quality prints there is no real justification for moving away from high quality files.

For a long time now, I have shot exclusively in an image format known as uncompressed RAW, in which very little processing and image manipulation is carried out by the camera. Some controversy has surrounded the Nikon RAW format, which produces a file of either 15 MB that is uncompressed or of 9 MB declared as a "virtually lossless" compression from the D200. In the compressed version, the 0 to 4095 range with which the original data is recorded is reduced to a 0 to 683[9] range and biased towards the highlights in the image - in other words shadow detail is considered secondary to highlight detail; this is described as a "lossy encoding" in that some colour depth[10] is discarded. Whether or not the lossy encoding is used, the data is compressed with a non-lossy algorithm to reduce the file sizes to final amounts.
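The 15 MB figure is consistent with one 12-bit value per pixel, which is what a 0 to 4095 range implies. The sketch below works through that arithmetic and also counts the bits needed for the reduced 0 to 683 range. One stored value per pixel is an assumption made for the illustration, and the bit count for the reduced range is an inference from the quoted numbers rather than a description of Nikon's actual file layout.

```python
D200_PIXELS = 3872 * 2592  # pixel dimensions quoted earlier in the article


def bits_for_range(max_value):
    """Number of bits needed to store integers from 0 to max_value."""
    return max_value.bit_length()


full_bits = bits_for_range(4095)     # original recording range
reduced_bits = bits_for_range(683)   # range after the lossy encoding

# Assumption: one stored value per pixel, packed with no wasted bits.
uncompressed_raw_bytes = D200_PIXELS * full_bits // 8

print(f"{full_bits} bits per value -> {uncompressed_raw_bytes / 1_000_000:.1f} million bytes")
print(f"reduced range needs {reduced_bits} bits per value")
print(f"levels kept: {684 / 4096:.0%} of the original")
```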

Notwithstanding the file size disadvantage, the direct capture of images in JPEG format remains unsatisfactory for me. Minor errors in exposure and colour balance are more easily corrected with the greater data resolution offered by RAW, and when postprocessing for punchy black and white images, the extra information in the RAW file produces more flowing results. The RAW images are handled in Adobe Lightroom for review and initial processing to high quality JPEGs; there is no workflow advantage to the smaller JPEG files at all. Further detailed processing is carried out in Photoshop if I want to, say, perform HDR processing or stitch pictures into a panorama.

I still tend to export images in the JPEG format because I have the sense it will stand the test of time (some camera RAW formats are already declared unsupported) but with suitably high quality.

Conclusions

- It depends how big you want to print - 2½ Megapixels is enough for normal size (6" x 4") prints and 1 Megapixel is fine for the web

- It's not the Megapixels, it's the noise

- Not all image files are the same

- Shooting RAW files gives good control later

Damian Harty

Damian is a trained engineer with Bachelor's and Master's degrees and is a Fellow of the Institution of Mechanical Engineers. This goes some way to explain his inability to simply use technology and his preference instead to deconstruct it and write down what he finds. And then use it.

[1] JPEG stands for "Joint Photographic Experts Group" and is a commonly used expression to denote the data compression format used for images that maximises perceived quality for a given file storage space. It is described well in http://en.wikipedia.org/wiki/JPEG. Note that the data format is more strictly known as JFIF ("JPEG File Interchange Format") but this is rarely used.

[2] http://en.wikipedia.org/wiki/Fractal has a great description of fractals and http://en.wikipedia.org/wiki/Fractal_compression lifts the lid on some of the waffle surrounding their use with images.

[3] "The sampling frequencies of 44.1 and 44.056 kHz were thus the result of a need for compatibility with the NTSC and PAL video formats used for audio storage at the time." - The Compact Disc Story, Kees A. Schouhamer Immink, Journal of the Audio Engineering Society Vol 46, No 5, May 1998, pages 458ff.

[4] "CSI - Crime Scene Investigation" - a US-made television programme that features heavy Nikon product placement and implausible data processing in many forms. But the geeks win, so it's not all bad.

[5] Some sources give 1,048,576 instead of exactly 1 million; the former number is 2²⁰ and more convenient with binary computers.

[6] Megapixel camera ratings are systematically exaggerated by including data captured by the sensor and not included in the final image, often by around 10%; these numbers are based on the image itself.

[7] Medium format cameras traditionally give square images on 2¼" x 2¼" (6 cm x 6 cm) film; digital databacks for these cameras often preserve the square image format.

[8] Rather confusingly, 30 million Bytes turns out to be 28.6 MegaBytes since one MegaByte is 1,048,576 Bytes as mentioned earlier. It seems that file sizes, where big is bad, are based on the binary numbers to make them smaller, and megapixels, where big is good, are based on decimal numbers to make them bigger. There is no fact that cannot be selectively reported by marketing staff.

[9] The encoding is described at http://www.nikonians.org/dcforum/DCForumID86/16055.html#0; "Nikonians" is a well organised forum but cannot be regarded as utterly definitive. Nevertheless the published information does seem to fit the facts well.

[10] Colour depth is the number of different shades of a given colour that can be represented in the image (whether or not these can actually be discerned by the observer).

© 2006-2016 Damian Harty. Design by Andreas Viklund.